Empowering Every Accelerator to Lead the AI Revolution

GigaIO delivers high-performance, scalable, edge-to-core AI platforms that support all accelerators and are easy to deploy and manage.

SC25 BIRDS OF A FEATHER SESSION

The Future of Open Interconnects for AI

The rapid evolution of AI workloads is pushing the boundaries of interconnect design, requiring innovative approaches to balance performance, scalability, and efficiency. This BOF will explore cutting-edge advancements in AI interconnects and their impact on performance optimization. Discussion topics include:

- The evolving role of open standards in AI infrastructure

- Scale-out vs. scale-up networking solutions for AI workloads

- Innovative interconnect designs that redefine performance limits

- Future-proofing AI networks: what’s next?

- Konstantin Rygol, Artificial Intelligence Manager, GigaIO

- John Ihnotic, Vice President, Engineering, GigaIO

- Dr. Hemal V. Shah, Distinguished Engineer in the Core Switching Group (CSG), Broadcom

- Kris Buggenhout, Principal Systems Design Engineer, AMD

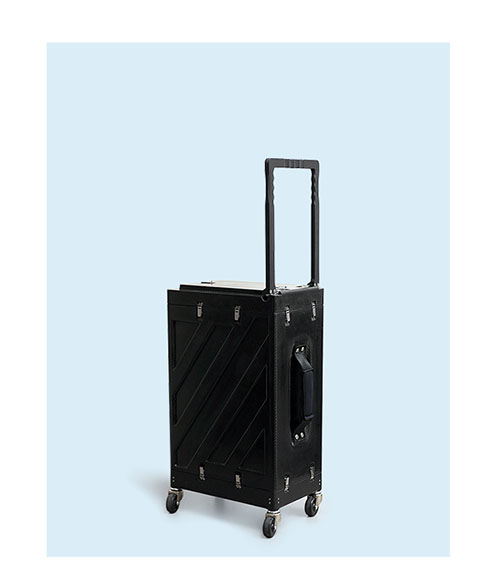

Perfectly paired, powerfully small.

Like root beer & ice cream, some combinations just work.

Together, GigaIO and SourceCode co-designed Gryf, the world’s first suitcase-sized AI supercomputer that brings datacenter-class processing and real AI to the far edge.

Proof that when innovative teams meet, great things happen.

BETTER TOGETHER

Redefining Scalable AI Infrastructure: Seamlessly Bridge from Edge to Core

Run AI jobs anywhere with Gryf, or reduce power draw with SuperNODE. Both are easy to deploy and manage, and both use GigaIO’s patented AI fabric, which provides ultra-low latency and direct memory-to-memory communication between GPUs to deliver near-perfect scaling for AI workloads. GigaIO’s systems offer substantial cost and energy-efficiency benefits, with up to 42% cost savings and 66% power savings.

Separate Pre-fill and Decode Operations to Optimize GPUs via Disaggregated Inferencing

GigaIO’s open architecture supports NVIDIA and non-NVIDIA GPUs and inference cards. Its solutions seamlessly enable disaggregated inferencing to provide superior performance and near-perfect scaling for:

- LLM inference

- RAG

- Fine-tuning

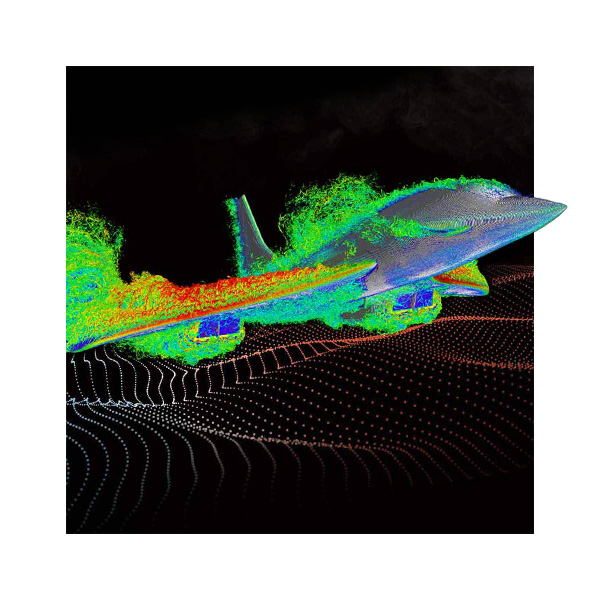

- Breakthrough performance in engineering and scientific workloads (including CFD)

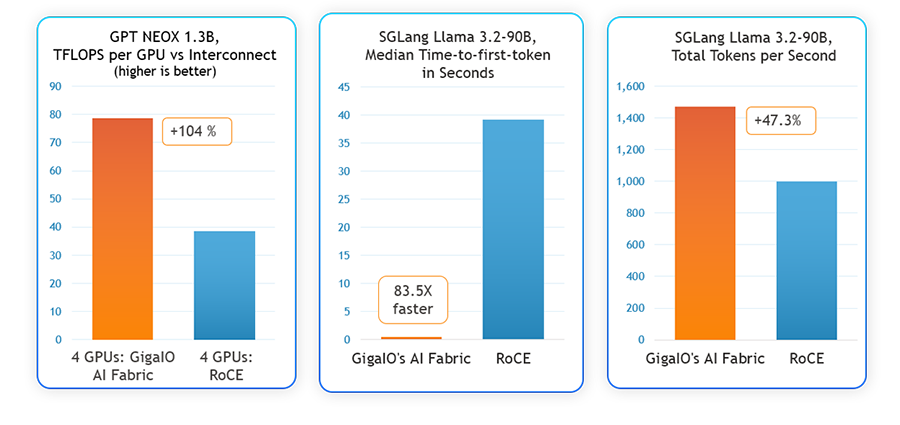

Breakthrough AI Performance: 2x Faster Training and Fine-Tuning with 83.5x Lower Latency

As AI models grow more complex, many teams face a critical bottleneck they didn’t anticipate: interconnect inefficiency.

Want smarter interconnects for power-constrained AI?

See how our AI fabric outperforms RoCE across NVIDIA A100 and AMD MI300X on training and inference benchmarks.

Our latest benchmarks reveal that GigaIO’s AI fabric outperforms traditional RoCE Ethernet in every AI workload.

Gryf: The World’s First Suitcase-sized AI Supercomputer

Run AI jobs anywhere with Gryf, the world’s first portable AI supercomputer that brings datacenter-class computing power directly to the edge. Co-designed with SourceCode to be airline cabin-friendly, Gryf is purpose-built for go-anywhere insights, allowing users to quickly transform the vast amounts of sensor data collected at the edge into actionable solutions.

Come meet Gryf at our booth.

SuperNODE: The World’s Most Powerful Scale-up AI Platform

Reduce power draw with SuperNODE, the world’s most powerful and energy-efficient scale-up AI computing platform. Now offered with AMD Instinct™ MI355X accelerators, NVIDIA GPUs, d-Matrix and Tenstorrent accelerators, and others, SuperNODE streamlines inference and fine-tuning with a single, unified memory space. Check out its record-breaking MLPerf Inference benchmark performance!

Check out the latest test results at our booth.

Supercharge Your CFD

SuperNODE, the world’s first 32-GPU server, flawlessly executed one of the largest CFD simulations ever, the Concorde landing at a resolution of 40 billion cells, in a mere 33 hours. The secret? Massive GPU memory, making the complex fast and easy.

See the video at our booth.

GigaIO’s Next-Gen AI Fabric

Smarter interconnects can have a transformative impact on AI infrastructure. GigaIO’s patented AI fabric provides ultra-low latency and direct memory-to-memory communication between accelerators. In recent benchmarks, it achieved better results than RoCE across the entire AI work chain, including 2x faster training and fine-tuning and 83x better time to first token for inferencing.

Learn more at our booth.