Empowering Every Accelerator to Lead the AI Revolution

GigaIO redefines scalable AI infrastructure, delivering edge-to-core AI platforms that support all accelerators and are easy to deploy and manage.

RESEARCH PAPER PRESENTATION

Rail-optimized PCIe Topologies for LLMs

Scaling LLMs efficiently requires innovative approaches to GPU interconnects. GigaIO’s rail-optimized, PCIe-based AI fabric topologies offer up to 3.7x better collective communication performance, and their accelerator-agnostic design adapts to diverse AI workloads. This paper explores optimized network architectures for large language model training and inference.

Thursday, 12 June, 2025 — 9:00 AM to 9:25 AM

Hall F — 2nd floor

Senior Network Software Engineer, GigaIO

Artificial Intelligence Manager, GigaIO

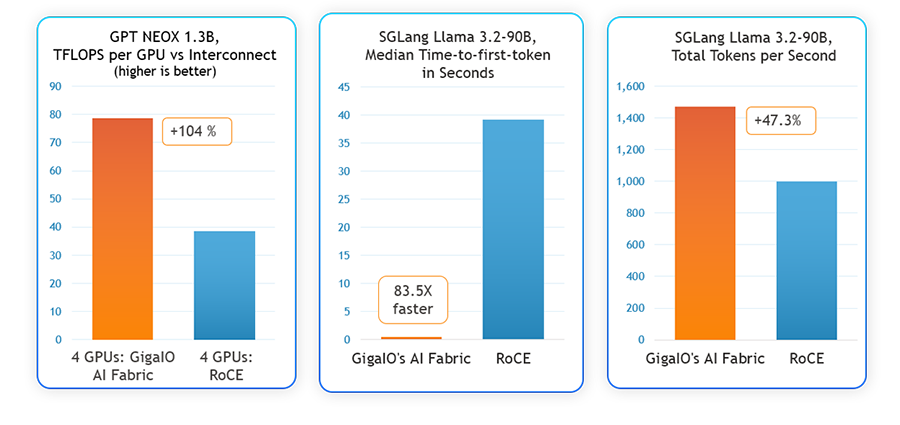

Breakthrough AI Performance: 2x Faster Training and Fine-Tuning with 83.5x Lower Latency

As AI models grow more complex, many teams face a critical bottleneck they didn’t anticipate: interconnect inefficiency.

Want smarter interconnects for power-constrained AI?

See how our AI fabric outperforms RoCE across NVIDIA A100 and AMD MI300X on training and inference benchmarks.

Our latest benchmarks reveal that GigaIO’s AI fabric outperforms traditional RoCE Ethernet in every AI workload tested.

Gryf: The World’s First Suitcase-sized AI Supercomputer

Run AI jobs anywhere with Gryf, the world’s first portable AI supercomputer that brings datacenter-class computing power directly to the edge. Co-designed with SourceCode to be airline cabin-friendly, Gryf is purpose-built for go-anywhere insights, allowing users to quickly transform the vast amounts of sensor data collected at the edge into actionable solutions.

Come meet Gryf at our booth.

SuperNODE: The World’s Most Powerful Scale-up AI Platform

Reduce power draw with SuperNODE, the world’s most powerful and energy-efficient scale-up AI computing platform. SuperNODE streamlines inference and fine-tuning with a single, unified memory space, and its open architecture supports NVIDIA and non-NVIDIA GPUs as well as inference cards. Check out its record-breaking MLPerf Inference benchmark performance!

Check out the latest test results at our booth.

GigaIO’s Next-Gen AI Fabric

Smarter interconnects can have a transformative impact on AI infrastructure. GigaIO’s patented AI fabric provides ultra-low latency and direct memory-to-memory communication between accelerators. In recent benchmarks, it outperformed RoCE across the entire AI workflow, including 2x faster training and fine-tuning and 83x better time to first token for inference.

Learn more at our booth.