Breakthrough

AI Performance:

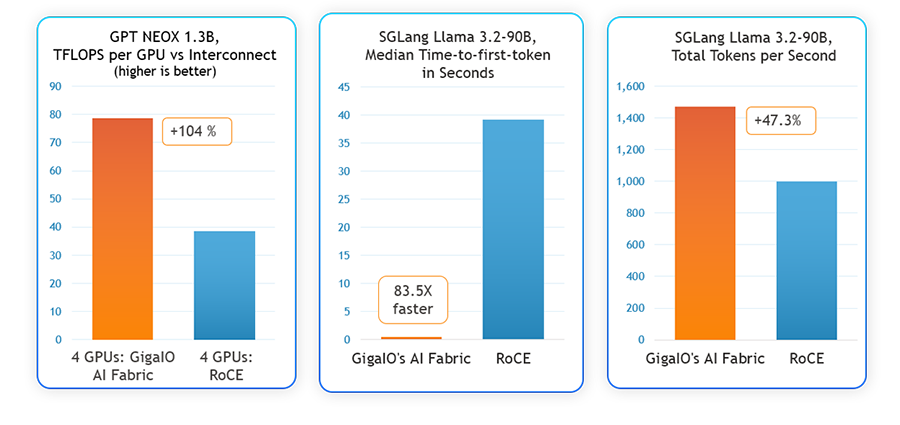

2x Faster Training and Fine Tuning with 83.5x Lower Latency

As AI models grow more complex, many teams face a critical bottleneck they didn’t anticipate: interconnect inefficiency.

Our latest benchmarks show that GigaIO’s AI fabric outperforms traditional RoCE Ethernet across every AI workload we tested.

Want smarter interconnects for power-constrained AI? See how our AI fabric outperforms RoCE across NVIDIA A100 and AMD MI300X on training and inference benchmarks.


Power Constraints Solved

With GigaIO’s AI fabric, you reach target performance with fewer GPUs, which directly eases data center power limits. In our tests, RoCE systems needed 35–40% more hardware (and energy) to match the AI fabric’s performance.


Inference at Scale

For models like Llama 3.2-90B Vision Instruct, our AI fabric delivers 83.5x faster response times under load. Chatbots, vision systems, and RAG pipelines respond in milliseconds, not seconds.


No More Tradeoffs

Unlike RoCE, GigaIO’s AI fabric eliminates protocol overhead and complex RDMA tuning. As Lamini CTO Greg Diamos notes: “With GigaIO, we spend less time on infrastructure and more time optimizing LLMs.”


The Bottom Line

Our AI fabric isn’t just faster; it’s also cheaper to deploy and operate. By eliminating expensive NICs, switches, and redundant GPUs, teams report 30–40% lower TCO within the first year.