Test Results for GigaIO SuperNODE™

Sign up below and you’ll be the first to know of the latest SuperNODE test results.

AI-Specific Test Results

The video below showcases the power of 32 GPUs on a SuperNODE: summarizing 82 articles per second using the MK1 Flywheel inference engine.

When running training workloads such as Llama and ResNet-50, SuperNODE continues to scale nearly linearly as GPUs are added.

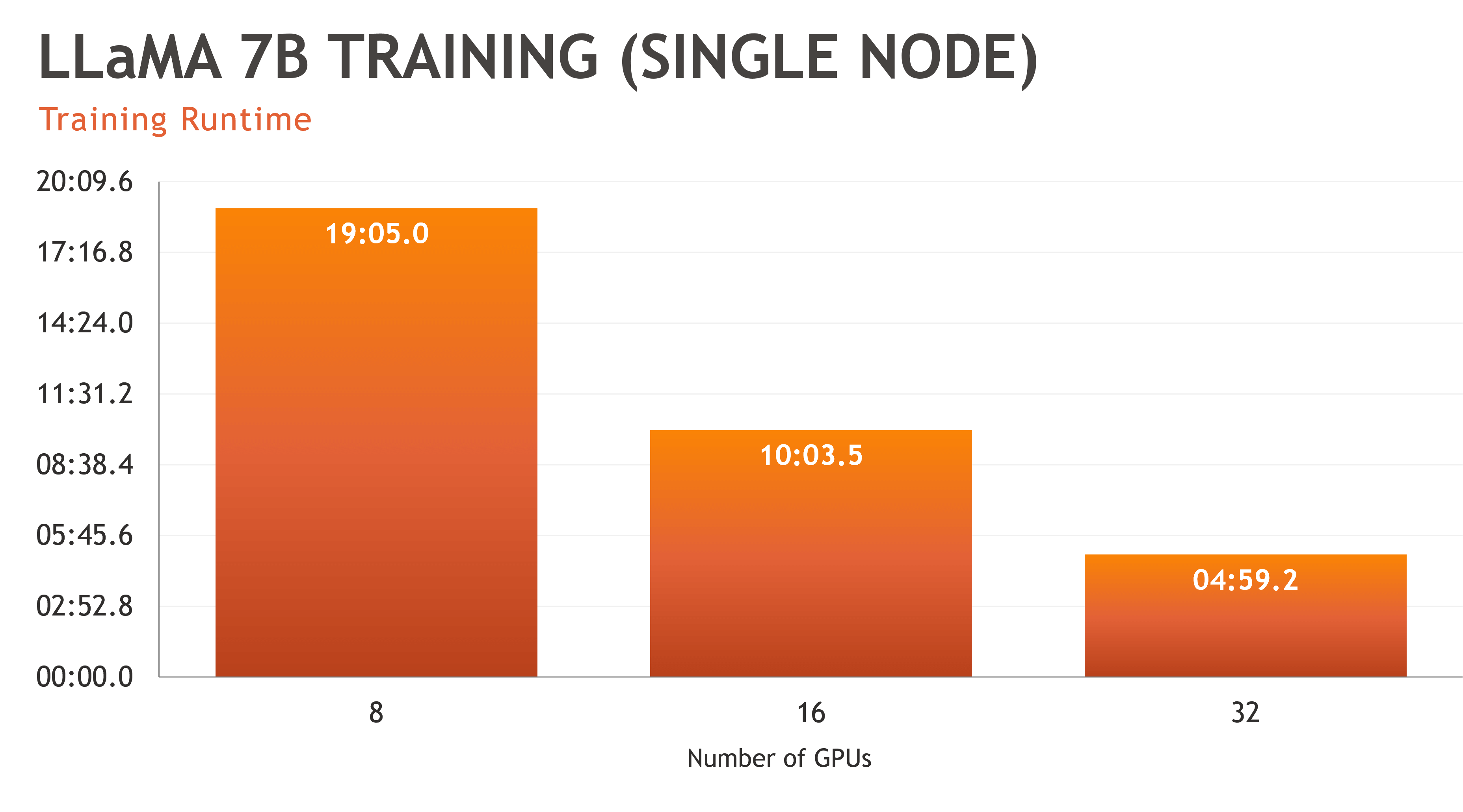

Llama 7B is part of the LLaMA (Large Language Model Meta AI) family of autoregressive large language models (LLMs), released by Meta AI starting in February 2023. “7B” refers to 7 billion parameters.

The graph below shows how time-to-results drops as more GPUs are added to a single node: from 19 minutes with a standard 8-GPU server configuration to under five minutes on a SuperNODE with 32 GPUs.

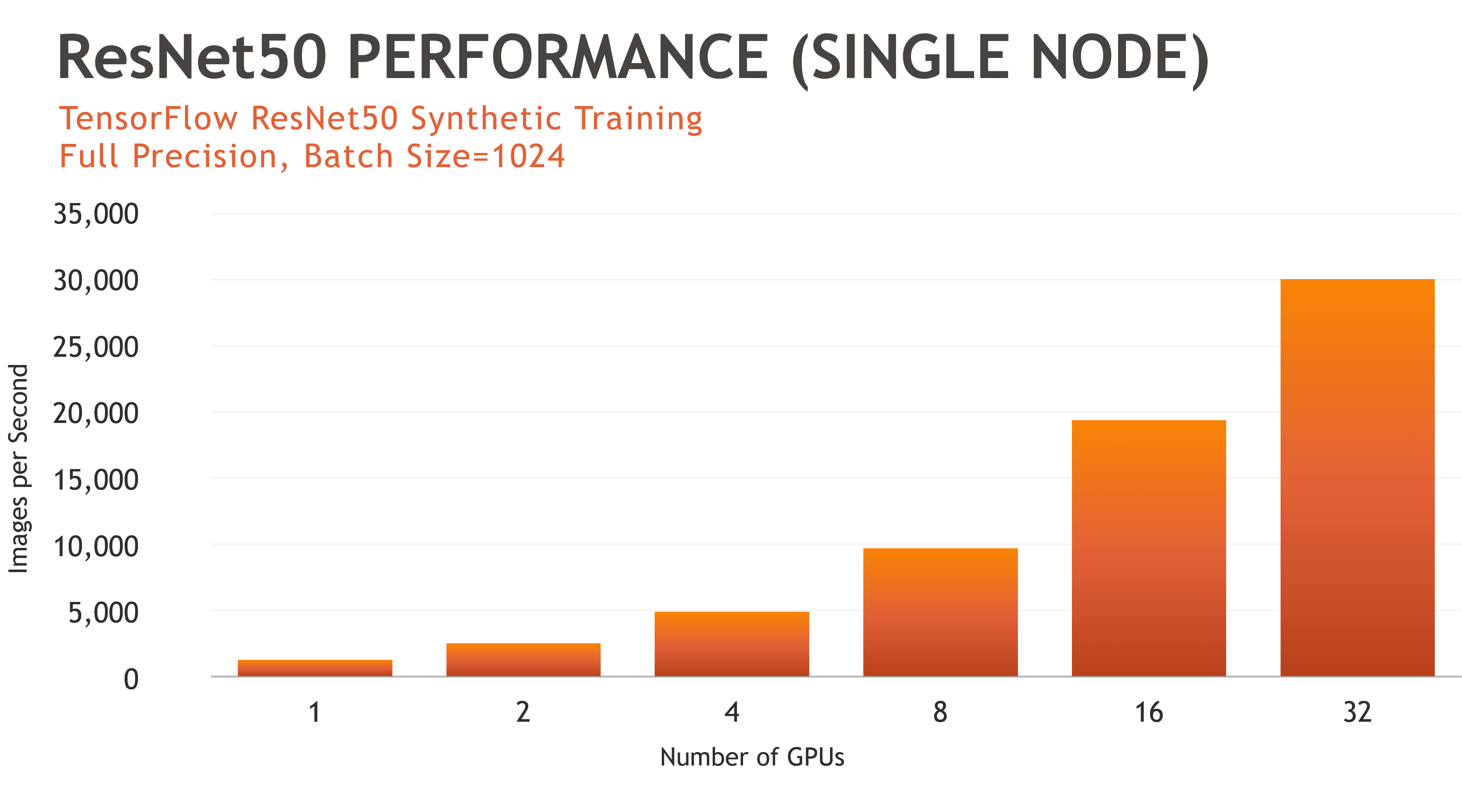
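One way to read the Llama 7B result is as scaling efficiency: the measured speedup divided by the ideal speedup implied by the GPU count. The minimal sketch below uses only the two figures quoted above. It takes 5 minutes as the 32-GPU time, but the text says the run took less than five minutes, so the efficiency it prints is a lower bound. The function name `scaling_efficiency` is illustrative only.

```python
def scaling_efficiency(base_gpus: int, base_minutes: float,
                       gpus: int, minutes: float) -> float:
    """Measured speedup divided by ideal (linear) speedup."""
    measured_speedup = base_minutes / minutes  # how much faster the run became
    ideal_speedup = gpus / base_gpus           # 4x the GPUs -> ideally 4x faster
    return measured_speedup / ideal_speedup


# Figures quoted in the text; 5.0 minutes is the stated upper bound for 32 GPUs.
eff = scaling_efficiency(base_gpus=8, base_minutes=19.0, gpus=32, minutes=5.0)
print(f"speedup >= {19.0 / 5.0:.1f}x, efficiency >= {eff:.0%}")
# prints: speedup >= 3.8x, efficiency >= 95%
```

With four times the GPUs and a speedup of at least 3.8x, the run retains at least 95% of ideal linear scaling.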

ResNet stands for Residual Network, a type of convolutional neural network (CNN) introduced in the 2015 paper "Deep Residual Learning for Image Recognition" by Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. CNNs are commonly used to power computer vision applications.

ResNet-50 is a convolutional neural network with 50 layers that carry learnable weights: 49 convolutional layers and one fully connected layer. Its max-pooling and average-pooling layers are not counted. Residual networks are built by stacking residual blocks, in which a shortcut (skip) connection adds each block's input to its output.
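In the original paper's accounting, the "50" counts only layers with learnable weights. The sketch below tallies them from the published ResNet-50 stage layout:

- a single 7×7 stem convolution,
- three-convolution bottleneck blocks repeated 3, 4, 6, and 3 times across the four stages,
- and a final fully connected classifier.

By convention, the 1×1 projection convolutions on shortcut paths and the pooling layers are not included in the count.

```python
# ResNet-50 stage layout from "Deep Residual Learning for Image Recognition":
# number of bottleneck blocks in each of the four stages (conv2_x .. conv5_x).
BLOCKS_PER_STAGE = [3, 4, 6, 3]
CONVS_PER_BOTTLENECK = 3  # 1x1 reduce, 3x3, 1x1 expand

stem_conv = 1                                                # 7x7 conv1
block_convs = sum(BLOCKS_PER_STAGE) * CONVS_PER_BOTTLENECK  # 16 blocks * 3 = 48
fully_connected = 1                                          # final classifier layer

conv_layers = stem_conv + block_convs
weighted_layers = conv_layers + fully_connected
print(conv_layers, weighted_layers)  # prints: 49 50
```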

The graph below shows how throughput, measured in images per second, increases as GPUs are added to a SuperNODE.

High-Performance Computing (HPC) Test Results

Over the weekend I got to test FluidX3D on the world's largest HPC GPU server, GigaIO SuperNODE. Here is one of the largest CFD simulations ever, the Concorde for 1s at 300km/h landing speed. 40 *Billion* cells resolution. 33h runtime on 32 AMD Instinct MI210, 2TB VRAM.

— Dr. Moritz Lehmann (@ProjectPhysX) August 1, 2023

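The headline numbers in the FluidX3D run can be cross-checked with simple arithmetic. The sketch below divides total GPU memory by the cell count to estimate the memory budget available per lattice cell. It relies on two assumptions:

- Each MI210 contributes 64 GiB, reading its rated 64 GB of HBM2e as binary gigabytes.
- Memory reserved by the driver or runtime is ignored.

The result is therefore an upper bound on the per-cell budget, not FluidX3D's measured footprint.

```python
GPUS = 32
VRAM_PER_GPU_BYTES = 64 * 2**30  # assumption: 64 GiB per AMD Instinct MI210
CELLS = 40e9                     # "40 Billion cells" from the post above

total_vram = GPUS * VRAM_PER_GPU_BYTES
bytes_per_cell = total_vram / CELLS
print(f"total VRAM: {total_vram / 2**40:.1f} TiB")     # prints: total VRAM: 2.0 TiB
print(f"budget per cell: {bytes_per_cell:.1f} bytes")  # prints: budget per cell: 55.0 bytes
```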

Initial Validation Test Results

GigaIO’s SuperNODE system was tested with 32 AMD Instinct MI210 GPUs attached to a Supermicro 1U server with dual AMD EPYC Milan processors.

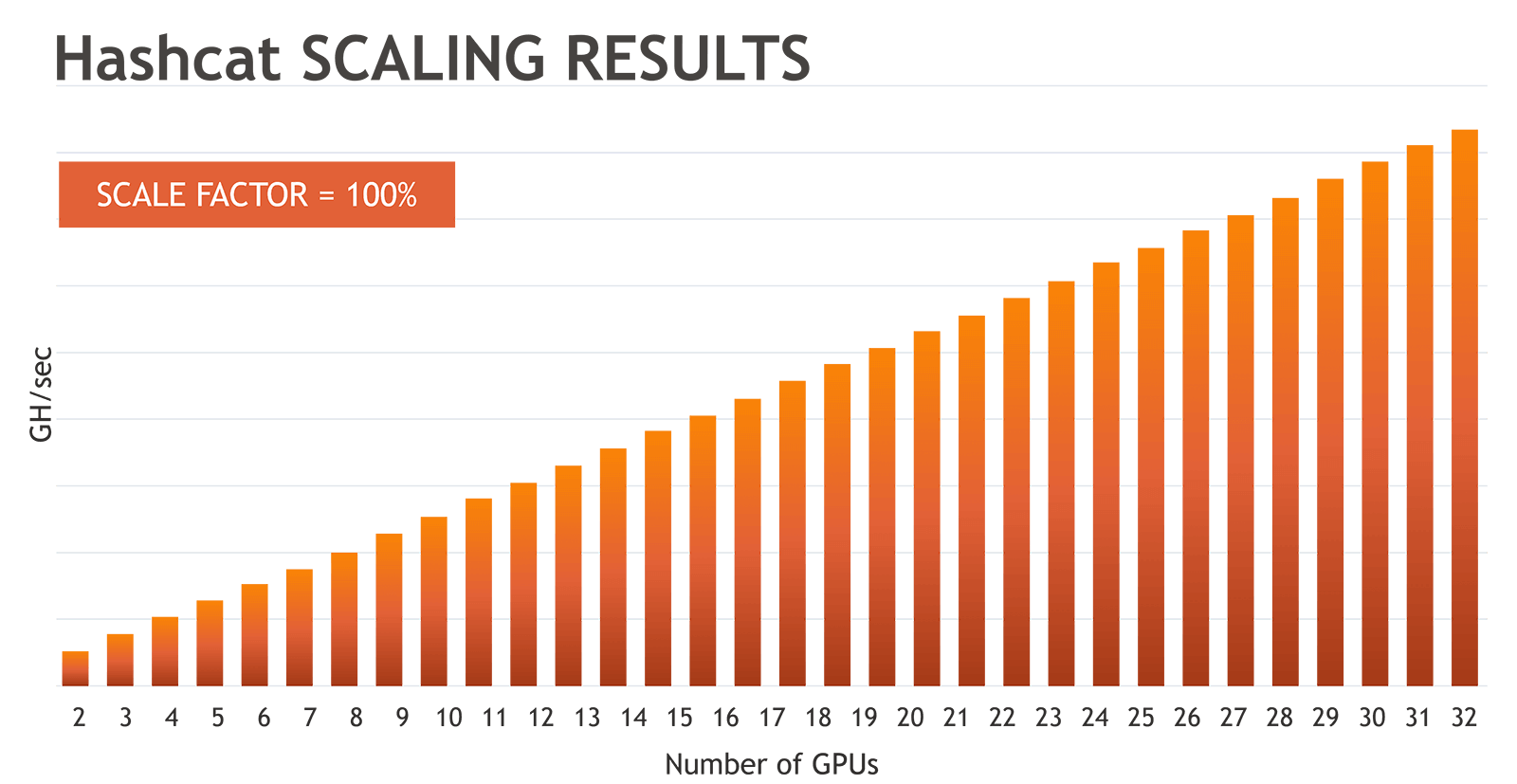

- Hashcat: Workloads that use GPUs independently, such as Hashcat, scale linearly across all 32 GPUs tested.

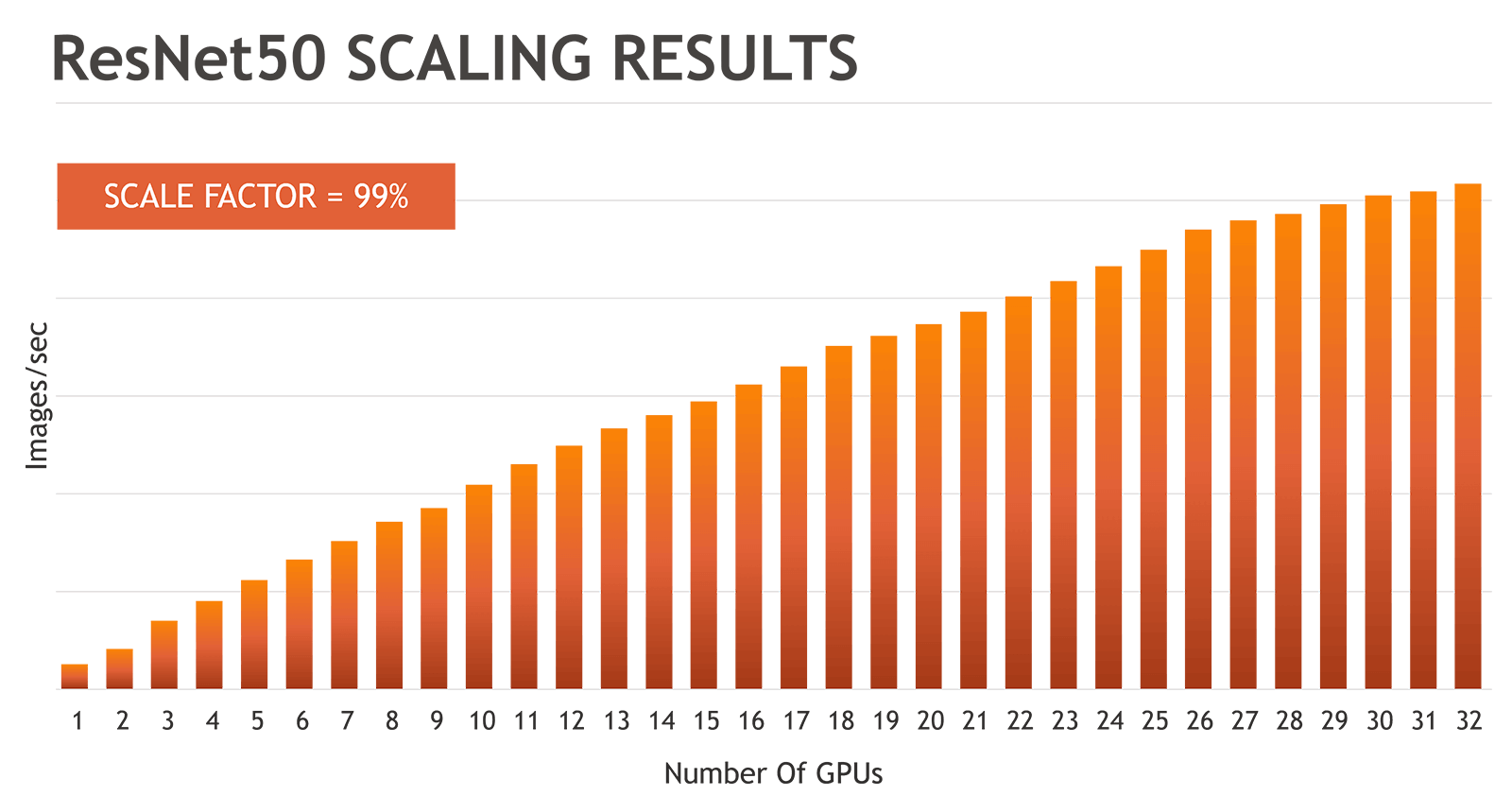

- ResNet-50: For workloads that use GPUDirect RDMA or peer-to-peer transfers, such as ResNet-50, the scale factor declines slightly as the GPU count rises. The degradation is about one percent per GPU, giving an overall scale factor of 70 percent at 32 GPUs.
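The two figures for ResNet-50, roughly one percent degradation per GPU and 70 percent at 32 GPUs, are consistent with each other. The sketch below compares two hypothetical readings of "one percent per GPU":

- A linear model that subtracts one percentage point for each GPU beyond the first.
- A compounding model that multiplies efficiency by 0.99 for each added GPU.

Neither model is stated in the source; the function names are illustrative only.

```python
def linear_efficiency(gpus: int, loss_per_gpu: float = 0.01) -> float:
    """Hypothetical model: lose a fixed fraction for each GPU beyond the first."""
    return 1.0 - loss_per_gpu * (gpus - 1)


def compounding_efficiency(gpus: int, loss_per_gpu: float = 0.01) -> float:
    """Hypothetical model: each added GPU keeps (1 - loss) of the prior efficiency."""
    return (1.0 - loss_per_gpu) ** (gpus - 1)


for n in (8, 16, 32):
    print(n, f"linear={linear_efficiency(n):.0%}",
          f"compounding={compounding_efficiency(n):.0%}")
```

At 32 GPUs the linear reading gives 69 percent and the compounding reading about 73 percent. The reported 70 percent falls between the two.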

These results show significantly better scalability than the legacy alternative of adding GPUs across multiple nodes that communicate over MPI. In multi-node testing, GPU scaling efficiency drops to 50 percent or less.
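Scaling efficiency translates directly into how many GPUs' worth of useful throughput a system delivers. The sketch below applies two efficiencies to 32 GPUs:

- 70 percent for the single SuperNODE, the ResNet-50 figure above.
- 50 percent for the multi-node MPI case. The text gives this only as an upper bound ("50 percent or less").

It assumes both figures describe comparable workloads at the same GPU count, which the text does not state explicitly. The helper `effective_gpus` is hypothetical.

```python
def effective_gpus(gpus: int, efficiency: float) -> float:
    """Throughput expressed in single-GPU equivalents: N * efficiency."""
    return gpus * efficiency


GPUS = 32
single_node = effective_gpus(GPUS, 0.70)  # SuperNODE, ResNet-50 scale factor
multi_node = effective_gpus(GPUS, 0.50)   # upper bound for multi-node MPI

print(f"SuperNODE:  {single_node:.1f} GPU-equivalents")
print(f"multi-node: {multi_node:.1f} GPU-equivalents (at most)")
print(f"advantage:  {single_node / multi_node:.2f}x or more")
```

Under these assumptions, the same 32 GPUs deliver about 22.4 GPU-equivalents on one SuperNODE versus at most 16 across nodes. That is an advantage of at least 1.4x.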

The following charts show real-world examples of these two use cases:

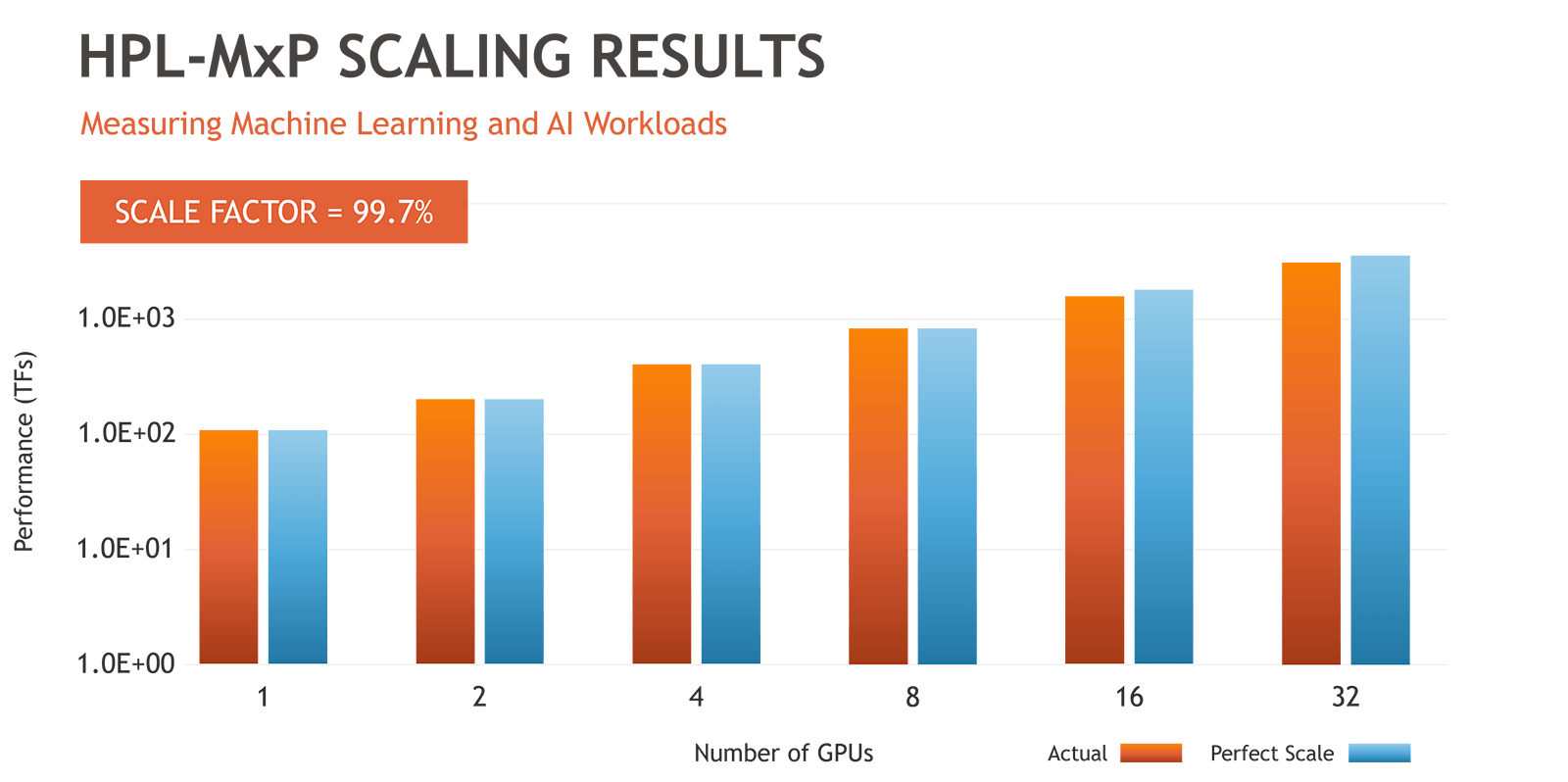

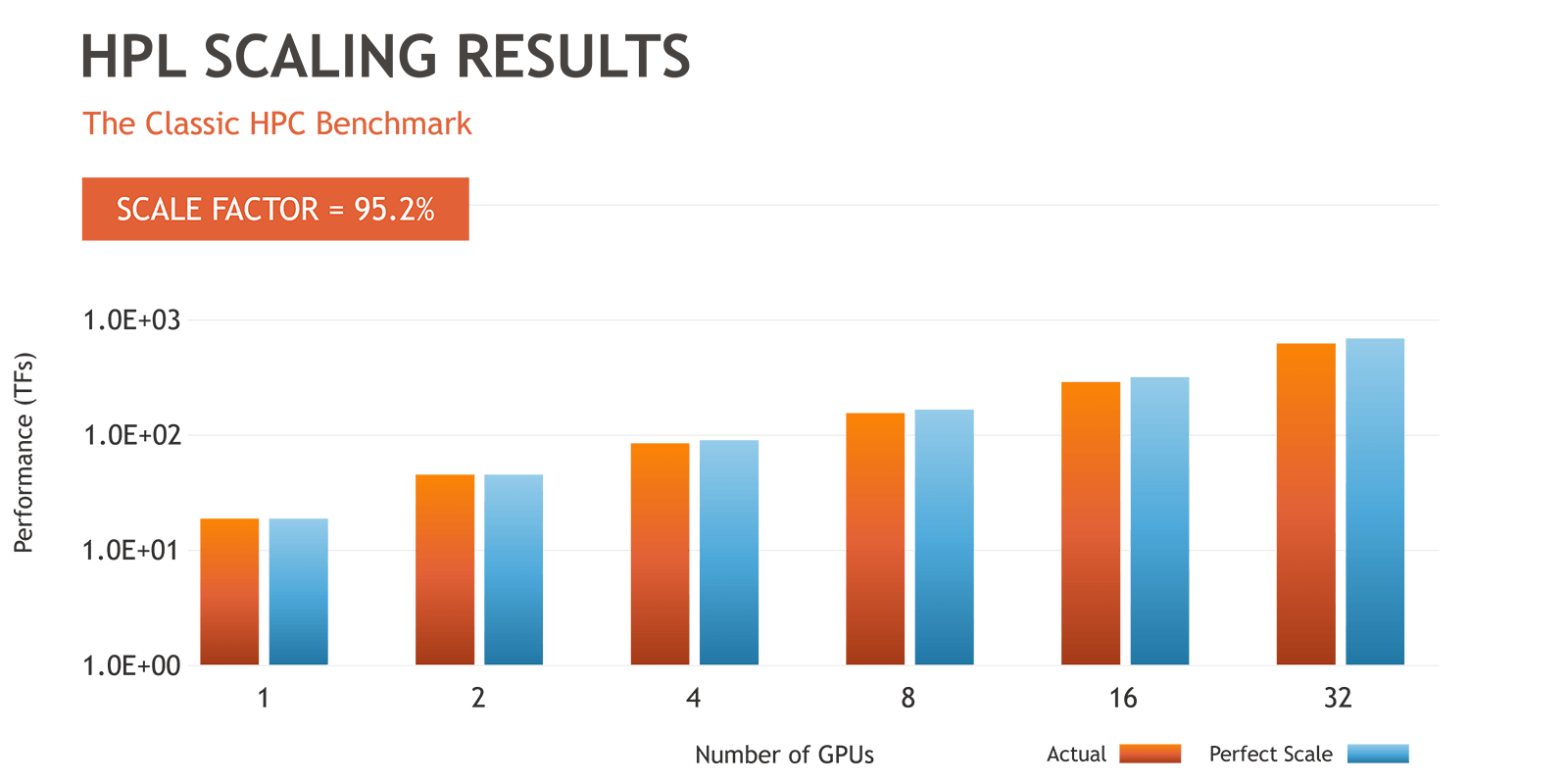

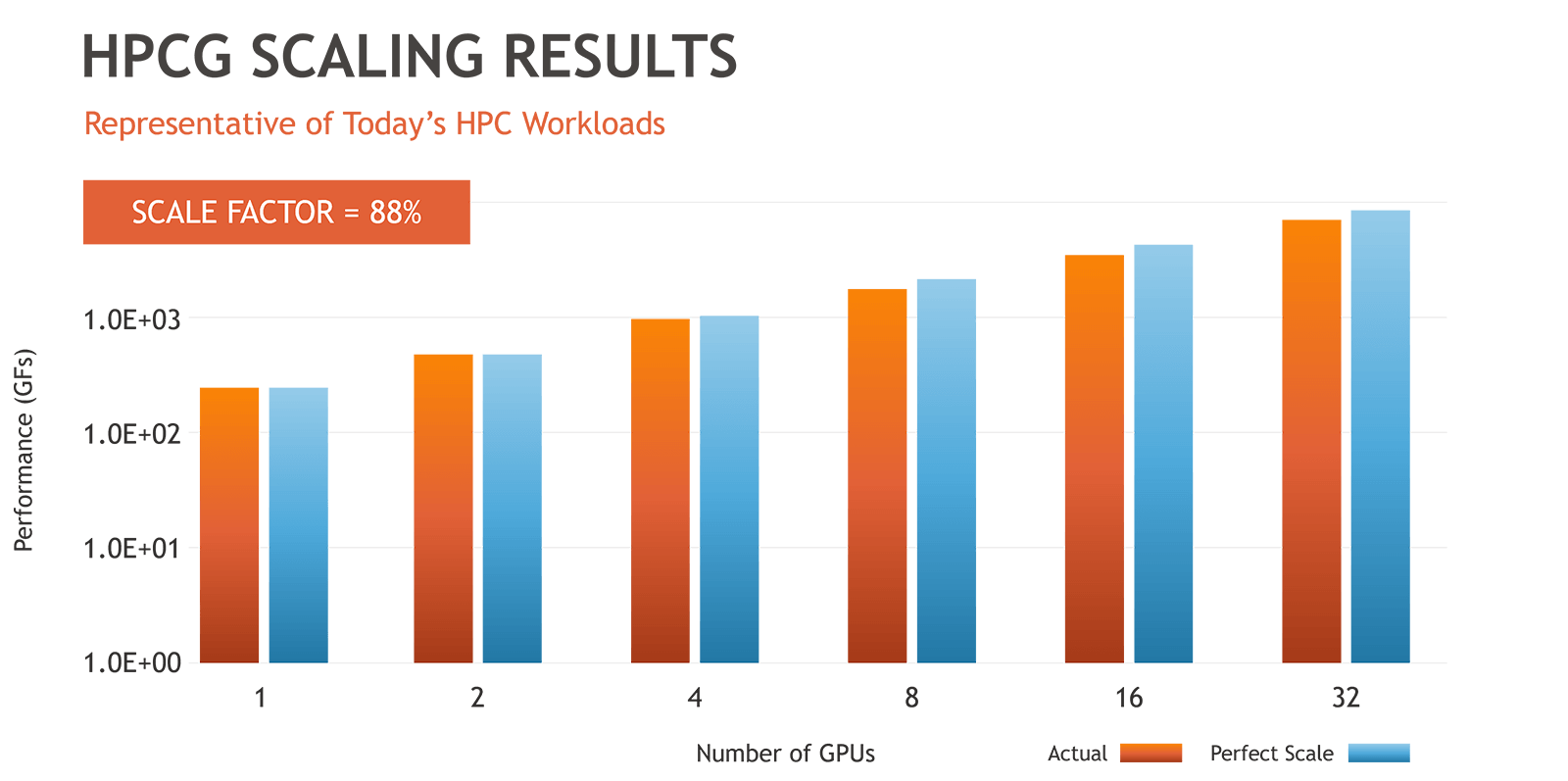

Several benchmarks used in the TOP500 supercomputer rankings also demonstrated extraordinary results:

- HPL-MxP, which measures mixed-precision (reduced-precision) compute performance, achieved 99.7% of ideal theoretical scaling on the SuperNODE.

- HPL achieved 95.2% of ideal theoretical scaling.

- HPCG, which is largely bound by memory bandwidth, achieved 88% of ideal scaling, an excellent result for a memory-bound workload.

Democratizing Access to AI and HPC’s Most Expensive Resources

Off-the-shelf systems that match SuperNODE’s accelerator-centric performance are impractical, if not cost-prohibitive, for most organizations.

- SuperNODE drastically reduces AI costs: A 32-GPU deployment built from standard 4-GPU servers would require eight servers. At an average cost of $25,000 apiece, the seven servers that SuperNODE eliminates represent $175,000, not including the cost of the GPUs. Eliminating per-node software licensing costs adds further savings.

- SuperNODE delivers significant savings on power consumption and rack space: Eliminating seven servers saves approximately 7 kW, with further power savings from the associated networking equipment, all while increasing system performance. Compared to a deployment of 4-GPU servers, SuperNODE reduces physical rack space by roughly 28% (23U vs. 32U).

- SuperNODE keeps code simple: An eight-server, 32-GPU system would require significant rewrites of higher-level application code to scale data operations, adding complexity and cost to deployment.

- SuperNODE shortens time-to-results: Replacing MPI communication between multiple servers over legacy networks with native intra-server peer-to-peer transfers delivers significantly better performance.

- SuperNODE provides the ultimate in flexibility: When workloads only need a few GPUs, or when several users need accelerated computing, SuperNODE GPUs can easily be distributed across several servers.
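The cost, power, and rack-space figures in the list above follow from simple arithmetic. The sketch below reproduces them using only inputs stated in the text:

- 32 GPUs at four per server.
- An average of $25,000 per server.
- About 1 kW per eliminated server. This is an assumption inferred from "seven servers saves approximately 7KW".
- 23U versus 32U of rack space.

GPU, networking, and licensing costs are left out, as they are in the text.

```python
import math

GPUS = 32
GPUS_PER_SERVER = 4
SERVER_COST_USD = 25_000
KW_PER_SERVER = 1.0     # assumption inferred from "seven servers saves approximately 7KW"
SUPERNODE_RACK_U = 23
FOUR_GPU_RACK_U = 32

servers_needed = math.ceil(GPUS / GPUS_PER_SERVER)  # 8 conventional servers
servers_eliminated = servers_needed - 1             # SuperNODE still uses one host server

cost_saved = servers_eliminated * SERVER_COST_USD
power_saved_kw = servers_eliminated * KW_PER_SERVER
rack_reduction = 1 - SUPERNODE_RACK_U / FOUR_GPU_RACK_U

print(servers_needed, servers_eliminated)  # prints: 8 7
print(f"${cost_saved:,}")                  # prints: $175,000
print(f"{power_saved_kw:.0f} kW")          # prints: 7 kW
print(f"{rack_reduction:.0%}")             # prints: 28%
```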

Additional benchmark results will continue to be posted on this page

Our engineering team continues to work with technology partners to run and validate benchmarks that demonstrate the benefits of SuperNODE across a range of applications. Sign up below if you’d like to be the first to hear when new benchmarks are published.