Imagine a Beowulf Cluster of SuperNODEs … (They did)

Note: This article originally appeared on hpcwire.com.

By Doug Eadline

December 6, 2023

Clustering resources for faster performance is not new. In the early days of clustering, the Beowulf project demonstrated that high performance was achievable from commodity hardware. These days, the “Beowulf cluster meme” gets used every time some new technology is deployed. For instance, “Imagine a Beowulf cluster of Frontier systems.” The joke is funny enough, but it comes a little closer to reality with the recent announcement by GigaIO and TensorWave.

GigaIO introduced the first 32-GPU single-node supercomputer, the SuperNODE™, in June of this year. The SuperNODE won two coveted HPCwire Editors’ Choice Awards at Supercomputing 2023 in Denver last month: Best AI Product or Technology and Top 5 New Products or Technologies to Watch. HPCwire has reported on the performance of the 32-GPU GigaIO SuperNODE and the 64-GPU SuperDuperNODE. Now, it seems the “imagine a Beowulf cluster of these” idea has been taken to heart by GigaIO and TensorWave.

Today, GigaIO announced the most significant order yet for its flagship SuperNODE™, which will eventually utilize tens of thousands of the AMD Instinct MI300X accelerators that are also launching today at the AMD “Advancing AI” event. GigaIO’s novel infrastructure will form the backbone of a bare-metal specialized AI cloud code-named “TensorNODE,” to be built by cloud provider TensorWave to supply access to AMD data center GPUs, especially for use in LLMs.

As stated in an interview with GigaIO’s Chief Technical Officer, Global Sales, Matt Demas, “We took our SuperNODE and created a large cluster for TensorWave.” Each SuperNODE has two servers for redundancy and has access to all GPU memory across the TensorNODE. There is also a large amount of scratch disk available on each TensorNODE.

The TensorNODE deployment will build upon the GigaIO SuperNODE architecture at a far grander scale, leveraging GigaIO’s PCIe Gen-5 memory fabric to provide a more straightforward workload setup and deployment than is possible with legacy networks, and eliminating the associated performance tax.

TensorWave will use GigaIO’s FabreX to create the first petabyte-scale GPU memory pool without the performance impact of non-memory-centric networks. The first installment of TensorNODE is expected to be operational starting in early 2024 with an architecture that will support up to 5,760 GPUs across a single FabreX memory fabric domain. Extremely large models will be possible because all GPUs will have access to all other GPUs’ VRAM within the domain. Workloads can access more than a petabyte of VRAM in a single job from any node, enabling even the largest jobs to be completed in record time. Throughout 2024, multiple TensorNODEs will be deployed.

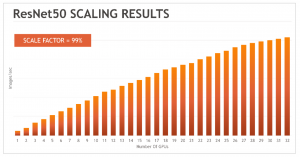

TensorNODE is an all-AMD solution featuring 4th Gen AMD CPUs and MI300X accelerators. The expected performance of the TensorNODE is made possible by the MI300X, which delivers 192GB of HBM3 memory per accelerator. The memory capacity of these accelerators, combined with GigaIO’s memory fabric, which allows for near-perfect scaling with little performance degradation, solves the challenge of underutilized or idle GPU cores due to distributed memory models.
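The “more than a petabyte” figure can be sanity-checked from the two numbers quoted in this article: up to 5,760 GPUs per FabreX domain and 192GB of HBM3 per MI300X. The short Python sketch below does that arithmetic. The function and constant names are illustrative only, and it assumes a fully populated domain in which every GPU's memory is counted toward the pool; it is a back-of-the-envelope check, not a description of how FabreX accounts for memory.

```python
# Back-of-the-envelope check of the pooled GPU memory in one TensorNODE domain.
# Figures come from the article; the names below are hypothetical.

GPUS_PER_DOMAIN = 5_760   # "up to 5,760 GPUs" in a single FabreX domain
HBM3_PER_GPU_GB = 192     # MI300X HBM3 capacity per accelerator


def pooled_memory_gb(gpus: int = GPUS_PER_DOMAIN,
                     per_gpu_gb: int = HBM3_PER_GPU_GB) -> int:
    """Total GPU memory in GB, assuming every GPU in the domain is populated."""
    return gpus * per_gpu_gb


total_gb = pooled_memory_gb()
total_pb = total_gb / 1_000_000  # decimal petabytes (1 PB = 1,000,000 GB)

print(f"{total_gb:,} GB is about {total_pb:.2f} PB")
```

Under these assumptions the pool comes to 1,105,920 GB, or roughly 1.1 PB, which is consistent with the petabyte-scale claim.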

“TensorWave is excited to bring this innovative solution to market with GigaIO and AMD,” said Darrick Horton, CEO of TensorWave. “We selected the GigaIO platform because of its superior capabilities, in addition to GigaIO’s alignment with our values and commitment to open standards. We’re leveraging this novel infrastructure to support large-scale AI workloads, and we are proud to be collaborating with AMD as one of the first cloud providers to deploy the MI300X accelerator solutions.”

The composable nature of GigaIO’s dynamic infrastructure provides TensorWave with unique flexibility and agility over standard static infrastructure; as LLM and AI user needs evolve, the infrastructure can be tuned on the fly to meet current and future needs. Additionally, TensorWave’s cloud will be greener than alternatives by eliminating GPU server hosts (often 4-8 GPUs per server) and associated networking equipment, saving cost, complexity, space, water, and power.

“We are thrilled to power TensorWave’s infrastructure at scale by combining the power of the revolutionary AMD Instinct MI300X accelerators with GigaIO’s AI infrastructure architecture, including our unique memory fabric, FabreX. This deployment validates our pioneering approach to reimagining data center infrastructure,” said Alan Benjamin, CEO of GigaIO. “The TensorWave team brings a visionary approach to cloud computing and a deep expertise in standing up and deploying very sophisticated accelerated data centers.”

Given the appetite for memory by GenAI models, the significant memory size and bandwidth offered by GigaIO and AMD should make the TensorWave TensorNODE attractive to many customers who are building and offering AI solutions in the cloud.

View source version on hpcwire.com: https://www.hpcwire.com/2023/12/06/imagine-a-beowulf-cluster-of-supernodes-they-did/