A Tale of Two LLM Architectures: The Simplicity of the GigaIO SuperNODE™ vs. the Complexity of an InfiniBand Server Farm

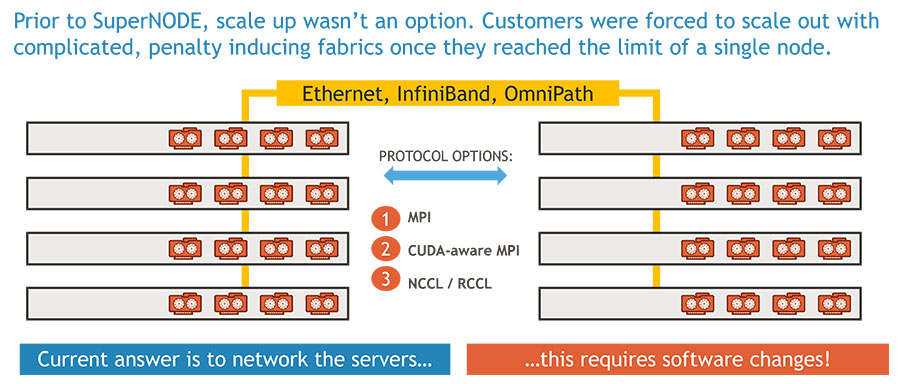

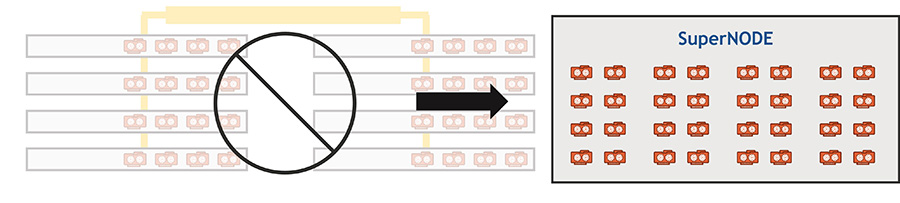

In the rapidly evolving world of artificial intelligence and machine learning, the demand for high-performance computing resources has never been greater. As Large Language Models (LLMs) continue to grow in size and complexity, organizations face a crucial decision: do they take the conventional approach of networking multiple servers, each holding a handful of GPUs, over InfiniBand or Ethernet, or do they adopt the innovative GigaIO SuperNODE, a single server packed with an astounding 32 GPUs?

According to one of our customers, an LLM platform provider, the choice comes down to the sheer simplicity and ease of management offered by the GigaIO SuperNODE. “It’s all about the time saved,” they explained. “With a conventional setup, you’re dealing with the complexity of setting up networking to manage multiple servers, ensuring they’re all properly configured, and troubleshooting any issues that arise. But with the SuperNODE, we get to spend less time focused on infrastructure so we can get to the optimizing faster.”

Faster Time to Model Deployment

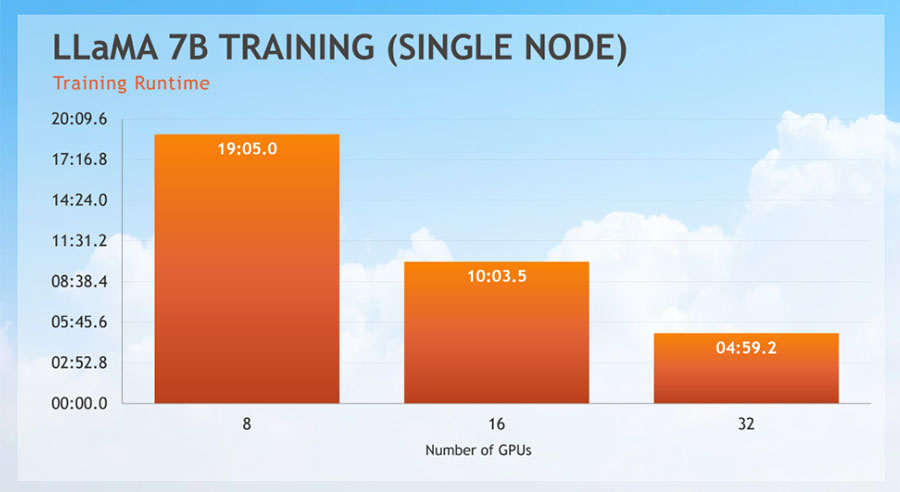

When it comes to deploying and optimizing LLMs, keeping every GPU inside a single node gives the GigaIO SuperNODE architecture a clear advantage. “With a conventional setup, you’d have to distribute your training and inference workloads across multiple servers, which adds significant complexity and can slow down the process,” our customer explained. “But with the SuperNODE, we can harness the full power of all 32 GPUs in one place, allowing us to get our LLMs up and running much faster.”

Faster time-to-results is particularly beneficial for organizations that have urgent deadlines or need to rapidly iterate on their models. “Less time messing with infrastructure means more time focused on the actual work of training and fine-tuning our LLMs,” our customer noted. “It’s a huge advantage in today’s fast-paced AI landscape.”

The Benefits of Consolidation

The traditional approach of relying on a fleet of servers, each with a modest number of GPUs, can quickly become unwieldy. Coordinating software deployments, updates, and maintenance across multiple machines requires significant time and effort, not to mention the potential for compatibility issues and bottlenecks in the network infrastructure.

In contrast, the GigaIO SuperNODE offers a streamlined solution. By consolidating all 32 GPUs into a single, high-powered server, organizations can significantly simplify their infrastructure and reduce administrative overhead. “Instead of juggling several servers, we have a single point of management,” our customer shared. “It’s a game-changer in terms of efficiency and productivity.”

Optimizing for Large Language Models

The GigaIO SuperNODE is specifically designed to cater to the unique demands of large language models. These complex AI systems require massive amounts of computational power and memory, which can be a challenge to accommodate in a traditional server environment.

“With the SuperNODE, we don’t have to worry about hitting memory or CPU limits,” our customer shared. “The sheer number of GPUs and associated unified VRAM, and the high-speed FabreX™ interconnect between them gives us the resources we need to train and run our LLMs at scale, without the bottlenecks we’d experience with a more fragmented setup.” FabreX, GigaIO’s AI memory fabric that seamlessly composes rack-scale resources, is the “secret sauce” behind the SuperNODE.

This level of performance and scalability is crucial for organizations looking to push the boundaries of what’s possible with LLMs. Whether it’s fine-tuning massive models, exploring new architectural innovations, or experimenting with novel training techniques, the GigaIO SuperNODE provides the computational horsepower and flexibility to support these cutting-edge initiatives.

Simplicity Breeds Productivity

At the heart of the SuperNODE’s appeal is its simplicity. With less infrastructure to stand up and maintain, organizations can focus their efforts on the core business of AI development and deployment.

“It’s all about getting out of the way of the technology,” our customer emphasized. “With the conventional approach, you’re constantly fighting against the complexity of your setup, trying to keep everything running smoothly. But with the SuperNODE, that complexity is gone, and we can just get on with the work of building and optimizing our LLMs.”

This simplicity translates directly into increased productivity and faster time-to-market for AI-driven initiatives. “We’re able to iterate on our models more quickly, experiment with new techniques more freely, and ultimately deliver better results to our stakeholders,” our customer shared. “It’s a profound shift in how we approach AI development, and it’s all made possible by the GigaIO SuperNODE.”

The SuperNODE’s Performance Advantage

Beyond simplicity and ease of use, the GigaIO SuperNODE also delivers a significant performance advantage. “The sheer number of high-memory GPUs and the seamless way they’re integrated means we get incredible throughput and scalability for our large language models,” our customer explained. “It performs so much better than trying to stitch together a patchwork of servers — it’s really no contest.”

Ultimately, the choice comes down to a simple equation. “It’s a hell of a lot simpler to use, and I get a performance advantage, so why wouldn’t I use it?” our customer said. With the power and efficiency of the GigaIO SuperNODE, organizations have a clear and compelling path to realizing the full potential of their large language model initiatives. It’s a true no-brainer.

The Future of AI Infrastructure

As the demand for LLMs and other large-scale AI models continues to grow, the need for high-performance, streamlined computing infrastructure will only become more pressing. The GigaIO SuperNODE offers a glimpse of the future of AI infrastructure, where simplicity, scalability, and raw computational power converge so that organizations can spend their time advancing their models rather than managing their hardware.

“The GigaIO SuperNODE is a game-changer because it allows us to focus on the work that really matters — the research, the development, and the optimization,” our customer concluded. “And in a field as dynamic and fast-paced as AI, that’s an invaluable advantage.”

Whether you’re a research institution, a tech giant, or a cutting-edge startup, the GigaIO SuperNODE offers a compelling solution to the challenges of large language model development and deployment. By prioritizing simplicity and performance, it empowers organizations to unlock the full potential of their AI initiatives and stay ahead of the curve in an increasingly competitive landscape.