GigaIO SuperNODE™

The World’s First 32-GPU Single-Node AI Supercomputer for Next-Gen AI and Accelerated Computing

- We Significantly Shorten LLM Development Time

Developers can focus on model creation without the hassle of scaling across multiple servers, speeding up the deployment of LLMs. “It’s EASY to scale with GigaIO!”

- By Breaking the 8-GPU Server Limit

We overcome traditional limitations by providing a seamless, scalable computing environment, free from the complexity and high cost of InfiniBand.

- We Deliver Leadership Price-Performance

Cost-effective, high-performance AI computing makes advanced technology more accessible and more profitable.

- We Are Ready for Immediate Deployment

Our solution is available now, allowing clients to leverage these benefits without delay.

A New Era of Disaggregated Computing

Technologies that reduce the number of required node-to-accelerator data communications are crucial to delivering the raw horsepower a robust AI infrastructure needs.

The GigaIO SuperNODE can connect up to 32 AMD or NVIDIA GPUs to a single node at the same latency and performance as if they were physically located inside the server box. The power of all these accelerators, seamlessly connected by GigaIO’s transformative PCIe memory fabric, FabreX, can now be harnessed to drastically speed up time to results.

The SuperNODE is a simplified system capable of scaling multiple accelerator technologies such as GPUs and FPGAs without the latency, cost, and power overhead required for multi-CPU systems.

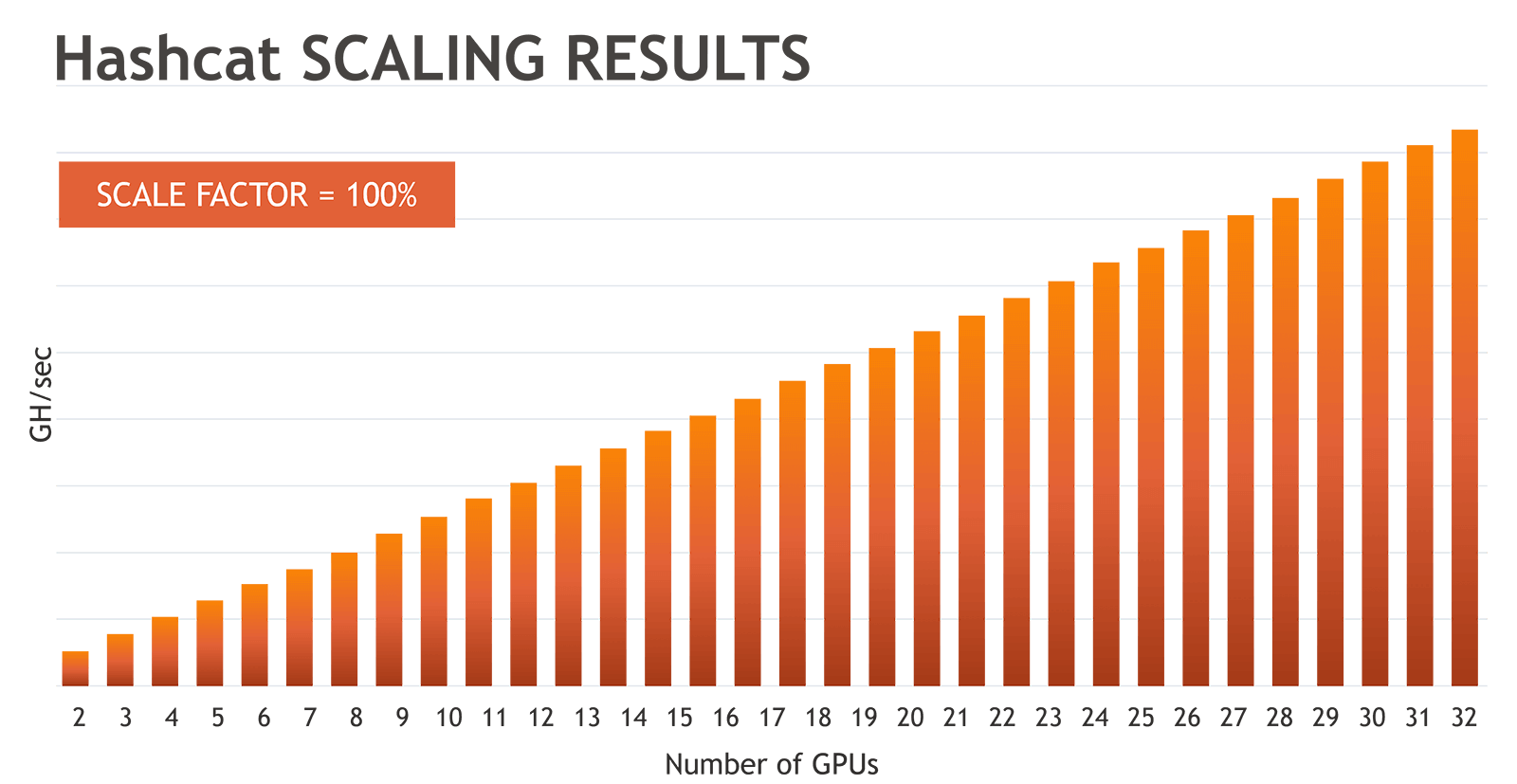

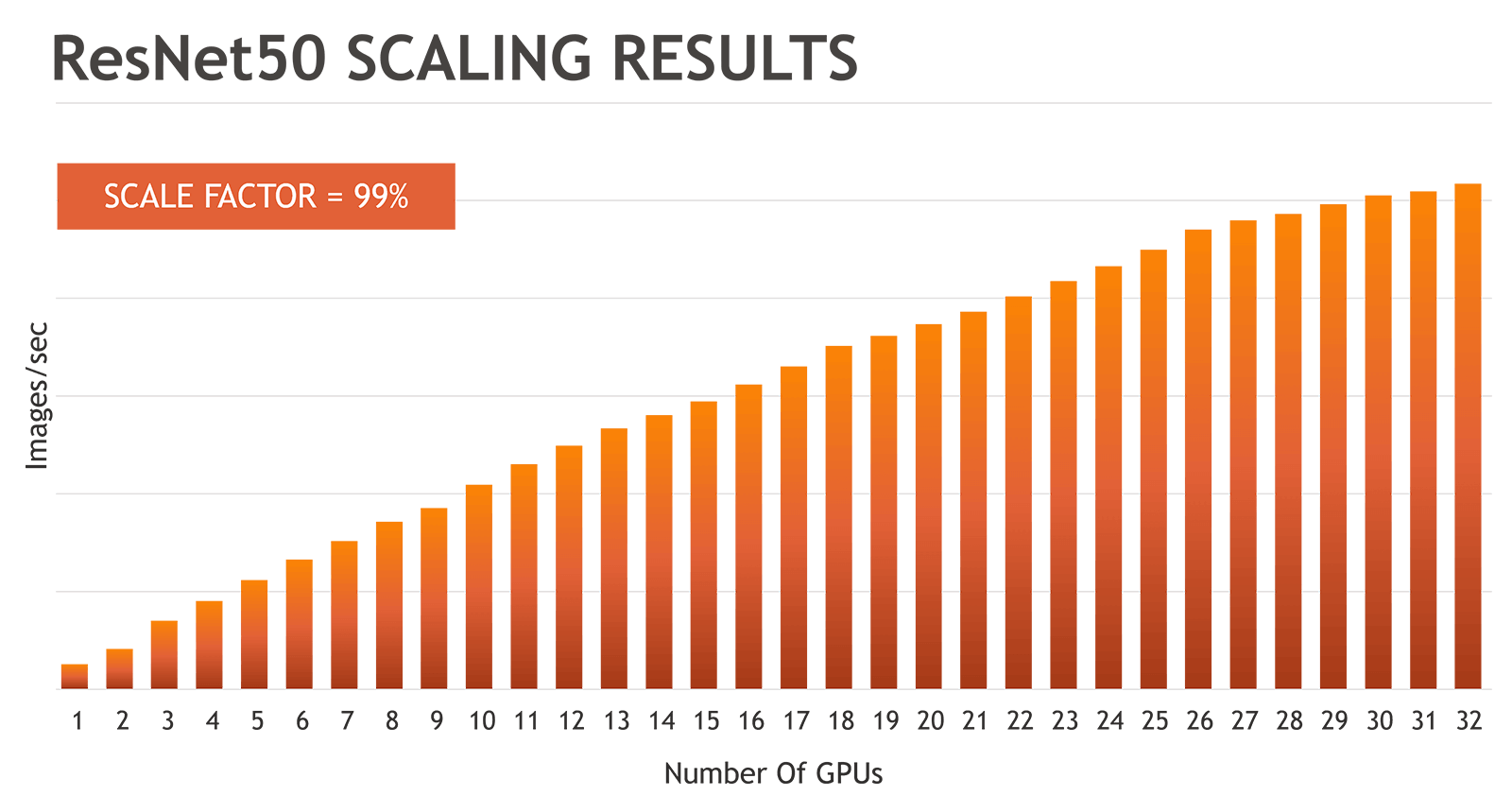

See For Yourself

Tested with 32 AMD Instinct™ MI210 GPUs on a 1U server with dual AMD EPYC™ “Milan” processors connected over GigaIO FabreX™.

Made Possible by FabreX

The GigaIO SuperNODE is powered by FabreX, GigaIO’s transformative high-performance AI memory fabric. In addition to enabling unprecedented device-to-node configurations, FabreX is also unique in making possible node-to-node and device-to-device communication across the same high-performance PCIe memory fabric. FabreX can span multiple servers and multiple racks to scale up single-server systems and scale out multi-server systems, all unified via the FabreX software.

Resources normally located inside of a server — including accelerators such as GPUs and FPGAs, storage, and even memory — can now be pooled in accelerator or storage enclosures, where they are available to all of the servers in the system. These resources and servers continue to communicate over the FabreX native PCIe memory fabric for the lowest possible latency and highest possible bandwidth performance, just as they would if they were still plugged into the server motherboard.

AI and Accelerated Computing Challenges

- Large language models (LLMs) and generative AI need large numbers of GPUs

- Standard server configurations restrict GPUs to what fits inside the server sheet metal

- Fixed server architectures result in lower utilization of expensive, hard-to-obtain accelerators

- Networking fixed-configuration GPU servers over legacy networks increases latency, reducing performance

Want to try your own code on SuperNODE for free?

Request access to our lab.

The Solution

GigaIO SuperNODE™ with FabreX™ Dynamic Memory Fabric

- Connects up to 32 AMD Instinct™ MI210 GPUs or 24 NVIDIA A100s, plus up to 1 PB of storage, to a single off-the-shelf server

- Enables lower-power, adaptive GPU supercomputing

- Lets you train large models with tools such as PyTorch or TensorFlow, scaling via peer-to-peer communication within a single node rather than MPI across several nodes

- Allows accelerators to be split across several servers, scaling with node-to-node communication for larger data sets

Benefits of GigaIO SuperNODE

- Shorten time to results by giving single-node code vastly more compute power

- Keep code simple: use your existing software without any changes – “It just works”

- Secure the ultimate flexibility for any workload: unprecedented power in “BEAST mode,” flexible configurations in “SWARM mode,” or shared resources in “FREESTYLE mode.”

- A single-node solution reduces network overhead, cost, latency, and server administration

- Save on power consumption (7 kW per 32-GPU deployment)

- Save on rack space (30% per 32-GPU deployment)

Unprecedented Compute Capability Available Now

Available today for emerging AI and accelerated computing workloads, the SuperNODE engineered solution, part of the GigaPod family, offers both unprecedented accelerated computing power when you need it and the ultimate in flexibility and accelerator utilization when your workloads require only a few GPUs.